This is an article from the Shaped 1.0 documentation. The APIs have changed and information may be outdated.

Images

Beyond Tags: The Power of Understanding Visuals for Relevance

In today's visually driven online world, relying solely on filenames, manually assigned tags, or interaction history for search and recommendation systems leaves significant value untapped. The rich, unstructured visual information within your platform's images – product photos, user-uploaded content, article illustrations, banners – holds the key to unlocking deeper relevance. Understanding these visuals allows systems to grasp:

- True Visual Content: What objects, scenes, or styles are actually depicted in this image, beyond basic labels?

- Aesthetic Similarity: Are these two items visually complementary or stylistically aligned, even if their metadata differs?

- Visual Nuance: Can we identify subtle visual attributes like color palettes, textures, or composition that influence user preference?

- Cross-Modal Understanding: How does the visual content relate to accompanying text descriptions or user queries?

- Latent Visual Preferences: Can we infer user tastes based on the visual characteristics of items they interact with?

Transforming raw pixels into meaningful signals, or features, that machine learning models can use is a crucial yet demanding part of feature engineering, and it relies on Computer Vision (CV). Nail it, and you enable powerful visual search, style-based recommendations, and richer user profiles. Neglect it, and you miss a fundamental dimension of user experience and relevance. The standard path involves building complex, resource-intensive CV pipelines.

The Standard Approach: Building Your Own Visual Understanding Pipeline

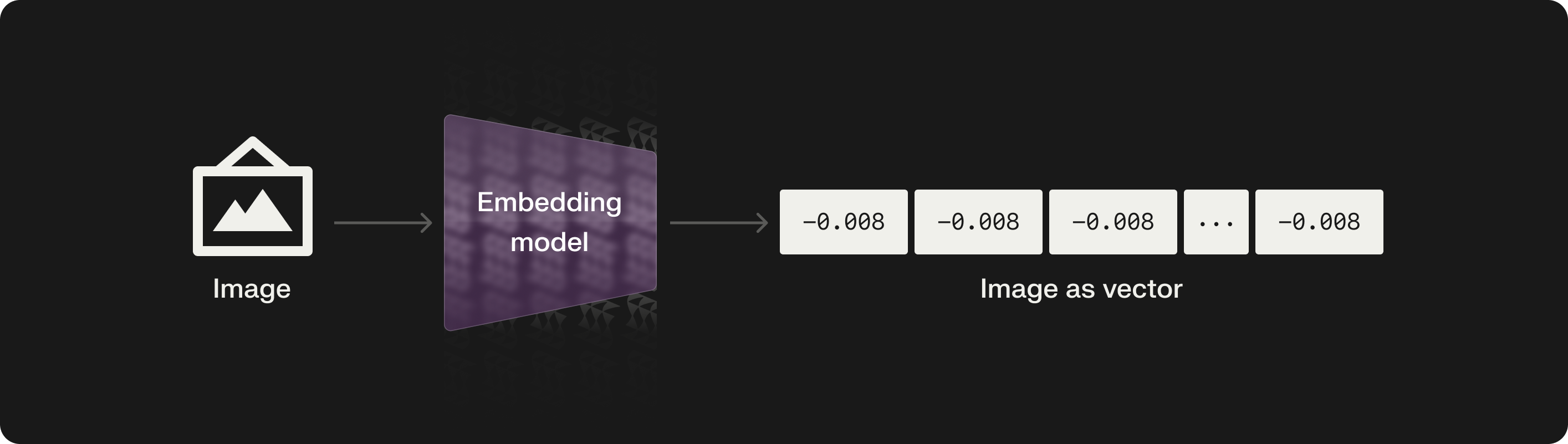

Leveraging visual data requires turning unstructured images into structured numerical representations (embeddings) that capture visual meaning. Doing this yourself typically involves a multi-stage, expert-driven process:

Step 1: Gathering and Preprocessing Image Data

- Collection: Aggregate image assets from diverse sources – CDNs, product databases, user upload storage, content management systems. Often involves handling URLs or binary data.

- Cleaning & Normalization: This is critical for consistent model input. Resize images to uniform dimensions, handle different file formats (JPG, PNG, WEBP), and normalize pixel values (e.g., scale to [0, 1] or standardize with the ImageNet channel means and standard deviations). During training, optionally apply data augmentation (rotations, flips, color jitter). Detect and handle corrupted or missing images.

- Pipelines: Build and maintain robust data pipelines to reliably ingest, validate, and preprocess potentially millions or billions of images.

The Challenge: Image data is diverse in size, quality, format, and content. Building reliable preprocessing pipelines requires significant data engineering and CV domain knowledge. Storage costs can also be substantial.
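
The normalization step described above can be sketched in a few lines. This is a minimal, dependency-free illustration rather than a production pipeline: it assumes the image has already been decoded and resized into rows of 8-bit RGB tuples, and the function name `normalize_image` is hypothetical. The mean and standard deviation values are the ImageNet channel statistics commonly used with pre-trained vision models.

```python
# Commonly used ImageNet per-channel statistics (RGB order, inputs scaled to [0, 1]).
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def normalize_image(pixels):
    """Scale 8-bit RGB pixels to [0, 1], then standardize each channel.

    `pixels` is a list of rows; each row is a list of (r, g, b) tuples
    with integer values in 0..255. Returns the same shape with floats.
    """
    normalized = []
    for row in pixels:
        new_row = []
        for rgb in row:
            new_row.append(tuple(
                (value / 255.0 - mean) / std
                for value, mean, std in zip(rgb, IMAGENET_MEAN, IMAGENET_STD)
            ))
        normalized.append(new_row)
    return normalized


# Hypothetical 1x2 image: one black pixel and one white pixel.
tiny_image = [[(0, 0, 0), (255, 255, 255)]]
result = normalize_image(tiny_image)
```

In practice, libraries such as Pillow and torchvision perform decoding, resizing, and normalization on whole arrays at once; the loop here only makes the arithmetic explicit.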

Step 2: Choosing the Right Vision Model Architecture

Selecting the appropriate CV model to generate embeddings is vital and requires navigating a rapidly evolving landscape.

- The Ecosystem (Hugging Face Hub, TIMM): Platforms like Hugging Face and libraries like timm (PyTorch Image Models) offer thousands of pre-trained vision models.

- Convolutional Neural Networks (CNNs): Architectures like ResNet and EfficientNet were long dominant and remain strong baselines. They excel at capturing local patterns.

- Vision Transformers (ViTs): Increasingly the state of the art, models like ViT, Swin Transformer, and DeiT treat an image as a sequence of patches, often capturing more global context. They generally require more data and compute than CNNs.

- Multimodal Models (e.g., CLIP, BLIP): Models like openai/clip-vit-base-patch32 or Salesforce's BLIP variants embed both images and text into a shared space. This is crucial for text-to-image search, image-to-text search, and zero-shot classification based on textual descriptions.

- Task-Specific Models: Models trained for specific tasks like object detection (YOLO, DETR) or segmentation (U-Net) generate different kinds of features, less commonly used for general similarity embeddings but vital for specific applications.

The Challenge: Requires deep CV expertise to select the appropriate architecture and pre-trained weights based on data characteristics, desired embedding properties (local vs. global features, text alignment), downstream task (similarity, classification, search), and computational budget (ViTs can be heavy).
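
To make the "patches as sequences" idea behind Vision Transformers concrete, the sketch below splits a small grayscale image into non-overlapping square patches and flattens each one. This mirrors the first step a ViT-style model performs before projecting patches into embeddings. It is illustrative only: the helper name `image_to_patches` and the toy 4x4 image are assumptions, and real models operate on multi-channel tensors.

```python
def image_to_patches(image, patch_size):
    """Split a 2D image (list of rows) into flattened, non-overlapping patches.

    Patches are returned in row-major order, so the result is the token
    sequence a ViT-style model would consume (before linear projection).
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")

    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [
                image[y][x]
                for y in range(top, top + patch_size)
                for x in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches


# Toy 4x4 grayscale image with pixel values 0..15.
toy_image = [[row * 4 + col for col in range(4)] for row in range(4)]
patch_sequence = image_to_patches(toy_image, patch_size=2)
```

The same procedure applied to a 224x224 input with 16x16 patches yields a sequence of 196 patches, which gives a sense of why ViT compute grows quickly with resolution.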

Step 3: Fine-tuning Models for Your Task and Data

Pre-trained models often need adaptation to perform optimally on your specific visual domain and business goals.

- Domain Adaptation: Further pre-train a model on your own large image corpus (e.g., all product photos) to help it learn the nuances of your specific visual style and object types.

- Metric Learning / Similarity Fine-tuning: Train the model using triplets (anchor, positive, negative examples) or pairs of images based on known similarity (e.g., same product, different angle vs. different product) to optimize embeddings for visual similarity search. Requires curated labeled data.

- Personalization Fine-tuning: Train models (e.g., Two-Tower architectures) where one tower processes user features/history and the other processes item image features, optimizing embeddings such that their similarity predicts user engagement (clicks, add-to-carts). Requires pairing interaction data with image data.

The Challenge: Fine-tuning vision models is computationally expensive (often requiring multi-GPU setups), needs significant ML/CV expertise, access to relevant labeled data (which can be hard to acquire for visual tasks), and extensive experimentation.
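
The triplet objective mentioned under metric learning can be written down compactly. The sketch below is a plain-Python illustration of the standard hinge-style triplet loss, not a training loop: the 2-D vectors, the margin of 0.2, and the function names are assumptions chosen for readability. A real setup would compute this over batches of model outputs in a framework such as PyTorch.

```python
import math


def euclidean_distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge-style triplet loss: max(0, d(a, p) - d(a, n) + margin)."""
    return max(
        0.0,
        euclidean_distance(anchor, positive)
        - euclidean_distance(anchor, negative)
        + margin,
    )


# Hypothetical 2-D embeddings; real image embeddings have hundreds of dimensions.
anchor = [0.0, 0.0]
positive = [0.0, 0.1]   # e.g., same product, different angle
negative = [1.0, 0.0]   # e.g., different product

# The negative is already far from the anchor, so no penalty is incurred.
well_separated = triplet_loss(anchor, positive, negative)

# Swapping the roles on purpose produces a violated triplet with positive loss.
violating = triplet_loss(anchor, negative, positive)
```

The loss is zero once the negative sits at least `margin` farther from the anchor than the positive, so training effort concentrates on triplets that still violate that ordering.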

Step 4: Generating and Storing Embeddings

Once a model is ready, run inference on your image dataset to get the embedding vectors.

- Inference at Scale: Set up efficient batch inference pipelines, almost always GPU-accelerated, to process large volumes of images.

- Vector Storage: Store these high-dimensional vectors. Just like text embeddings, Vector Databases (Pinecone, Weaviate, Milvus, Qdrant, etc.) are essential for efficient storage and fast Approximate Nearest Neighbor (ANN) search to power visual similarity lookups.

The Challenge: Large-scale image inference is computationally demanding and costly. Deploying, managing, scaling, and securing a Vector Database adds significant operational overhead.
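
For intuition about what a vector database computes, the sketch below performs exact nearest-neighbor search by cosine similarity over a tiny in-memory index. It is a brute-force baseline under stated assumptions (hypothetical item IDs, 3-D vectors, and function names), not how any particular vector database is implemented; ANN indexes approximate this result while avoiding a full scan.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k_similar(query, index, k=2):
    """Exact (brute-force) search: score every stored vector, keep the best k."""
    scored = [
        (item_id, cosine_similarity(query, vector))
        for item_id, vector in index.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical item embeddings keyed by item ID.
embedding_index = {
    "red_dress": [0.9, 0.1, 0.0],
    "crimson_gown": [0.8, 0.2, 0.1],
    "blue_jeans": [0.0, 0.1, 0.9],
}

neighbors = top_k_similar([1.0, 0.0, 0.0], embedding_index, k=2)
```

Because every query scans the full index, the cost grows linearly with catalog size, which is the motivation for ANN structures once the catalog reaches millions of images.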

Step 5: Integrating Embeddings into Applications

Use the generated image embeddings in your live systems.

- Visual Similarity Search: Build services ("find similar images," "shop the look") that query the Vector Database in real-time.

- Feature Input: Fetch image embeddings (from the Vector DB or a feature store) in real-time to feed as input features into ranking models (LTR), personalization models, or classification systems.

- Cross-Modal Search: Use multimodal embeddings (like CLIP) to enable searching images using text queries or finding text based on image input.

The Challenge: Requires building low-latency microservices. Ensuring data consistency and performance across application DBs, image stores, Vector DBs, and rankers is complex.

Step 6: Handling Maintenance and Edge Cases

- Nulls/Missing Images: Define fallback strategies for items without images (e.g., zero vectors, default embeddings, relying on other features).

- Model Retraining & Updates: Periodically retrain models on new data, regenerate embeddings for the entire catalog, and update the Vector DB, ideally seamlessly.

- Cost Management: GPUs for training and inference, plus Vector Database hosting, significantly impact infrastructure costs.
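
The fallback strategies for missing images listed above can be sketched as follows. This is one possible approach under stated assumptions: the function names, the `strategy` parameter, and the toy 2-D embeddings are hypothetical, and the "mean" option stands in for a default embedding.

```python
def mean_vector(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    count = len(vectors)
    return [sum(column) / count for column in zip(*vectors)]


def embeddings_with_fallback(item_ids, known_embeddings, strategy="mean"):
    """Return an embedding for every item, filling gaps for missing images.

    strategy="zero" uses an all-zero vector; strategy="mean" uses the
    average of the embeddings that do exist (a simple default embedding).
    """
    existing = list(known_embeddings.values())
    dimension = len(existing[0])
    if strategy == "zero":
        fallback = [0.0] * dimension
    elif strategy == "mean":
        fallback = mean_vector(existing)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return {
        item_id: known_embeddings.get(item_id, fallback)
        for item_id in item_ids
    }


# Hypothetical catalog where item "c" has no image.
known = {"a": [1.0, 0.0], "b": [0.0, 1.0]}
filled_mean = embeddings_with_fallback(["a", "b", "c"], known, strategy="mean")
filled_zero = embeddings_with_fallback(["a", "b", "c"], known, strategy="zero")
```

Which strategy works better depends on the downstream model: a zero vector is easy to detect as "no image", while a mean embedding keeps the item near the center of the catalog in similarity searches.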

The Shaped Approach: Automated & Flexible Visual Feature Engineering

The DIY path for image features is a major engineering investment, especially when considering latency and vector storage at scale. Shaped integrates state-of-the-art computer vision directly into its platform, offering both automated simplicity and expert-level flexibility.

How Shaped Streamlines Image Feature Engineering:

- Automated Processing (Default): Simply include image URL columns (e.g., product_image_url, thumbnail_url) in your fetch.items query. Shaped automatically fetches these images, preprocesses them, and uses its built-in advanced vision models (often multimodal like CLIP) to generate internal representations (embeddings).

- Native Integration: These visual-derived features are natively combined with collaborative signals (user interactions), text features, and other metadata within Shaped's unified ranking models. For many common relevance tasks, you don't need to manage image fetching, preprocessing, embedding generation, Vector Databases, or feature joining manually.

- Implicit Fine-tuning: Shaped's training process automatically optimizes the use of visual features alongside behavioral and textual signals to improve relevance for your specific objectives (clicks, conversions, engagement).

- Flexibility via Hugging Face Integration (Multimodal Focus): For users needing specific visual capabilities or more control, Shaped allows you to override the default vision/multimodal model. By setting the language_model_name parameter in your model YAML (which often handles both text and image modalities when using multimodal models), you can specify compatible models from Hugging Face, like different CLIP variants or other multimodal architectures.

  - Use Cases: Select specific CLIP models optimized for your domain, use variants with different vision backbones (e.g., ViT vs. ResNet), or ensure consistency if you use a specific embedding elsewhere.

  - How it Works: Shaped downloads the specified model and uses it to generate internal embeddings for the image URLs you provide (as well as text embeddings if text fields are present). These embeddings are seamlessly integrated into Shaped's ranking policies.

- Managed Infrastructure & Scale: Shaped transparently handles the underlying compute (including GPUs essential for modern vision models), image fetching/storage during processing, and serving infrastructure for both default and user-specified models.

- Graceful Handling of Missing Data: Designed to handle items with missing image URLs without requiring manual imputation or breaking the system.

Leveraging Image Features with Shaped

Let's see how easy it is to incorporate image features, both automatically and by specifying a multimodal model.

Goal 1: Automatically use product images to improve recommendations.

Goal 2: Explicitly use OpenAI's CLIP model for joint text/image understanding.

1. Ensure Data is Connected:

Assume item_metadata (with product_image_url, title, description) and user_interactions are connected.

2. Define Shaped Models (YAML):

- Example 1: Automatic Image Handling

    model:
      name: auto_image_recs
    connectors:
      - name: items
        type: database
        id: items_source
      - name: events
        type: event_stream
        id: events_source
    fetch:
      items: |
        SELECT
          item_id, title, description,
          product_image_url, # <-- Just include the image URL field
          category, price
        FROM items_source
      events: |
        SELECT
          user_id, item_id, event_type,
          event_timestamp
        FROM events_source

- Example 2: Specifying a Hugging Face Model (CLIP)

    model:
      name: clip_recs_hf
      language_model_name: openai/clip-vit-base-patch32 # <-- Specify the desired Hugging Face multimodal model
    connectors:
      - name: items
        type: database
        id: items_source
      - name: events
        type: event_stream
        id: events_source
    fetch:
      items: |
        SELECT
          item_id, title, description, # Shaped encodes text using OpenAI’s CLIP model
          product_image_url, # Shaped encodes image using OpenAI’s CLIP model
          category, price
        FROM items_source
      events: |
        SELECT
          user_id, item_id, event_type,
          event_timestamp
        FROM events_source

3. Create the Models & Monitor Training:

    shaped create-model --file automatic_image_model.yaml
    shaped create-model --file clip_hf_model.yaml

    # Monitor both models until their status is ACTIVE
    shaped view-model --model-name auto_image_recs
    shaped view-model --model-name clip_recs_hf

4. Use Standard Shaped APIs:

Call rank, similar_items, etc., using the appropriate model name. The API call remains the same, but the relevance calculations are now deeply informed by visual understanding (either Shaped's default or your specified CLIP model), often in conjunction with text and behavior.

Python:

    from shaped import Shaped

    # Initialize the Shaped client
    shaped_client = Shaped()

    # Get recommendations using the default image model
    response_auto = shaped_client.rank(
        model_name='auto_image_recs',
        user_id='USER_1',
        limit=10
    )

    # Get recommendations using the specified CLIP HF model
    response_hf = shaped_client.rank(
        model_name='clip_recs_hf',
        user_id='USER_2',
        limit=10
    )

    # Print the recommendations
    if response_auto and response_auto.metadata:
        print("Recommendations using default image model:")
        for item in response_auto.metadata:
            print(f"- {item['title']} (Image URL: {item['product_image_url']})")

    if response_hf and response_hf.metadata:
        print("Recommendations using CLIP HF model:")
        for item in response_hf.metadata:
            print(f"- {item['title']} (Image URL: {item['product_image_url']})")

JavaScript:

    const { Shaped } = require('@shaped/shaped');

    // Initialize the Shaped client
    const shapedClient = new Shaped();

    // Get recommendations using the default image model
    shapedClient.rank({
      modelName: 'auto_image_recs',
      userId: 'USER_1',
      limit: 10
    }).then(responseAuto => {
      if (responseAuto && responseAuto.metadata) {
        console.log("Recommendations using default image model:");
        responseAuto.metadata.forEach(item => {
          console.log(`- ${item.title} (Image URL: ${item.product_image_url})`);
        });
      }
    });

    // Get recommendations using the specified CLIP HF model
    shapedClient.rank({
      modelName: 'clip_recs_hf',
      userId: 'USER_2',
      limit: 10
    }).then(responseHF => {
      if (responseHF && responseHF.metadata) {
        console.log("Recommendations using CLIP HF model:");
        responseHF.metadata.forEach(item => {
          console.log(`- ${item.title} (Image URL: ${item.product_image_url})`);
        });
      }
    });

Conclusion: Harness Visual Power, Minimize CV Pain

Visual data is indispensable for modern relevance, but extracting its value traditionally demands significant CV expertise, complex pipelines, expensive infrastructure (GPUs, Vector DBs), and ongoing maintenance.

Shaped transforms visual feature engineering. Its automated approach lets you benefit from advanced computer vision simply by providing image URLs. For enhanced control, the seamless Hugging Face integration provides access to powerful multimodal models like CLIP with minimal configuration. In both cases, Shaped manages the underlying complexity—fetching, preprocessing, embedding, storing, and integrating—allowing you to focus on creating visually compelling and relevant user experiences, not on building intricate CV systems from scratch.

Ready to unlock the power of your image data for superior search and recommendations?

Request a demo of Shaped today to see how easily you can leverage visual features. Or, start exploring immediately with our free trial sandbox.